ZFS Volblocksize Round 2

Round 2!

I did a bit more testing on volblocksizes, and this time I found a faster way to switch between sizes. Volblocksize is fixed when a zvol is created, so every size needs a fresh disk: I changed the volblocksize setting for the ZFS storage in the Proxmox GUI (it applies to newly created disks), added a new disk to my existing fileserver VM, ran the test, deleted the disk, changed the size again, and repeated.

The fileserver VM is stored on a stripe of two 4 TB SSDs.

I simulated a few different fileserver workloads by copying various types of files from my Windows 11 desktop to the share. The desktop is connected over 10GbE and has a fast NVMe drive.

Here are the transfer times for each run:

| Transfer Time (m:ss) | Size and Type | Volblocksize | Number of Files | Average File Size | Average Speed (MB/s) |
|---|---|---|---|---|---|
| 0:13 | 6.2 GB zip file | 64k | 1 | 6.2 GB | 477.54 |
| 0:13 | 6.2 GB zip file | 8k | 1 | 6.2 GB | 477.54 |
| 1:38 | 6.3 GB EverQuest folder | 64k | 18535 | ~350 KB | 64.29 |
| 1:55 | 6.3 GB EverQuest folder | 8k | 18535 | ~350 KB | 54.74 |
| 1:24 | 8.5 GB Resident Evil 5 | 64k | 13692 | ~640 KB | 101.19 |
| 1:37 | 8.5 GB Resident Evil 5 | 8k | 13692 | ~640 KB | 87.63 |
| 2:18 | 29.1 GB raw videos | 64k | 2 | 14.55 GB | 210.76 |
| 2:31 | 29.1 GB raw videos | 8k | 2 | 14.55 GB | 193.69 |

These results were the opposite of what I expected. I would have expected the 8k volblocksize to be faster for the two game folders, which contain a ridiculous number of small files. I would also have expected the difference to be larger for the raw video transfers. Let's test more.
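To put a number on "the difference", here is a small sketch that computes how much faster 64k was than 8k for each workload. The speeds are copied as-is from the Average Speed column of the table above; nothing is re-measured.

```python
# (workload, 64k MB/s, 8k MB/s), copied from the table above.
RESULTS = [
    ("6.2 GB zip file", 477.54, 477.54),
    ("EverQuest folder", 64.29, 54.74),
    ("Resident Evil 5", 101.19, 87.63),
    ("raw videos", 210.76, 193.69),
]


def speedup_pct(fast: float, slow: float) -> float:
    """Percent by which the `fast` speed exceeds the `slow` speed."""
    return (fast / slow - 1.0) * 100.0


for name, mbps_64k, mbps_8k in RESULTS:
    pct = speedup_pct(mbps_64k, mbps_8k)
    print(f"{name:18} 64k is {pct:5.1f}% faster than 8k")
```

That works out to about 17.4% for the EverQuest folder, 15.5% for Resident Evil 5, 8.8% for the raw videos, and no difference for the single zip file.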

CrystalDiskMark works on network shares, so I tried that next.

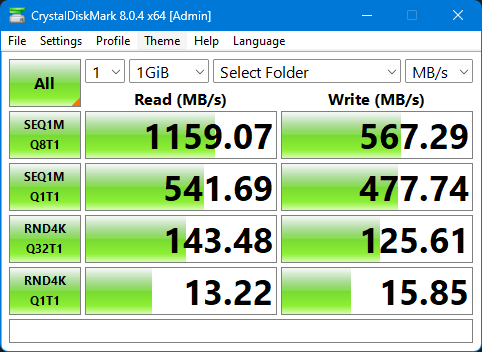

8k:

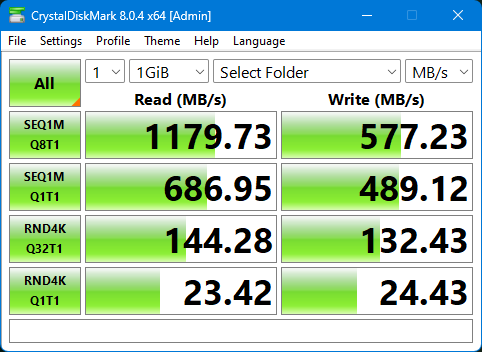

64k:

The speed increase in the 1M tests makes sense to me with a 64k volblocksize. The 54% speed increase in the 4k tests makes less sense. These results line up with the benchmarks on the four spinning disks from my last post, but they still surprise me!

What have I learned so far?

Well, it's still pretty confusing. 64k was faster than 8k in every test I ran, but it probably causes a decent amount of write amplification for the small writes typical of VM workloads, since ZFS has to write a whole new 64k block even when only 4k of it changes.
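To put rough numbers on that write-amplification worry, here is a back-of-the-envelope sketch. It assumes each guest write starts on a volblock boundary and ignores compression, metadata, and the ZIL; under those assumptions ZFS writes whole volblocks, so the ratio is the size of the full blocks touched divided by the size the guest actually wrote. The function name is hypothetical.

```python
import math


def aligned_write_amplification(write_kib: int, volblocksize_kib: int) -> float:
    """Bytes ZFS writes per byte the guest writes, for a block-aligned write.

    ZFS is copy-on-write at volblock granularity: a partial-block write means
    reading the old block (if it isn't cached) and writing a whole new one.
    Assumes alignment; ignores compression, metadata, and the ZIL.
    """
    blocks_touched = math.ceil(write_kib / volblocksize_kib)
    return blocks_touched * volblocksize_kib / write_kib


for vbs in (8, 64):
    for write in (4, 16, 64):
        ratio = aligned_write_amplification(write, vbs)
        print(f"{write:>2}K write on {vbs:>2}K volblocks -> {ratio:.1f}x")
```

Under these assumptions a 4K write costs 2x at 8k but 16x at 64k, while 64K writes come out at 1x for both, so small random writes are the case to watch with 64k.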

After all this testing, I'm getting interested in moving away from the fileserver-in-a-VM setup. It has been a pretty elegant solution, since I can back up, restore, and migrate the entire fileserver quickly and easily. I think I can still get most of the same functionality with a plain ZFS dataset mounted in a container, using zfs send/receive to back it up to the Proxmox Backup Server. That could probably be automated with a simple script and end up faster and better than what I'm doing now.
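A script like that could look roughly like the sketch below: take a snapshot, then pipe `zfs send` (incremental with `-i` when a previous snapshot exists) into `zfs receive` on the backup machine over SSH. This is only a sketch under assumptions: the dataset and host names are hypothetical, it assumes the backup machine has a ZFS pool of its own that you can reach over SSH with permission to run `zfs`, and it leaves out pruning old snapshots and remembering the last snapshot name. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess
from datetime import datetime, timezone
from typing import Optional

# Hypothetical names: replace with your own pool, dataset, and backup host.
SOURCE_DATASET = "tank/fileserver"
BACKUP_HOST = "backup-host"
BACKUP_DATASET = "backup/fileserver"


def snapshot_name(now: datetime) -> str:
    """Sortable snapshot name, e.g. 'auto-20240102-030405'."""
    return now.strftime("auto-%Y%m%d-%H%M%S")


def build_commands(source: str, new_snap: str, prev_snap: Optional[str],
                   host: str, target: str):
    """Return (snapshot, send, receive) argument lists.

    Without a previous snapshot a full stream is sent; with one,
    `zfs send -i` sends only the changes between prev_snap and new_snap.
    """
    snap_cmd = ["zfs", "snapshot", f"{source}@{new_snap}"]
    send_cmd = ["zfs", "send"]
    if prev_snap is not None:
        send_cmd += ["-i", f"{source}@{prev_snap}"]
    send_cmd.append(f"{source}@{new_snap}")
    # -F rolls the target back to its most recent snapshot before receiving.
    recv_cmd = ["ssh", host, "zfs", "receive", "-F", target]
    return snap_cmd, send_cmd, recv_cmd


def pipe(send_cmd: list, recv_cmd: list) -> None:
    """Run `send_cmd | recv_cmd` and fail if either side fails."""
    sender = subprocess.Popen(send_cmd, stdout=subprocess.PIPE)
    receiver = subprocess.Popen(recv_cmd, stdin=sender.stdout)
    sender.stdout.close()  # lets the sender get SIGPIPE if the receiver exits early
    recv_rc = receiver.wait()
    send_rc = sender.wait()
    if send_rc != 0 or recv_rc != 0:
        raise RuntimeError(f"send exited {send_rc}, receive exited {recv_rc}")


def backup(prev_snap: Optional[str], dry_run: bool = True) -> str:
    """Snapshot the dataset and replicate it; returns the new snapshot name."""
    new_snap = snapshot_name(datetime.now(timezone.utc))
    snap_cmd, send_cmd, recv_cmd = build_commands(
        SOURCE_DATASET, new_snap, prev_snap, BACKUP_HOST, BACKUP_DATASET)
    if dry_run:
        print(shlex.join(snap_cmd))
        print(f"{shlex.join(send_cmd)} | {shlex.join(recv_cmd)}")
    else:
        subprocess.run(snap_cmd, check=True)
        pipe(send_cmd, recv_cmd)
    return new_snap


if __name__ == "__main__":
    # Dry run: print the commands for a first (full) backup without running them.
    backup(prev_snap=None, dry_run=True)
```

Passing the returned snapshot name as `prev_snap` on the next run would make each later backup incremental. The two sides of the pipe are started with `Popen` rather than `shell=True` so a failing `zfs send` isn't hidden behind a successful `zfs receive`.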

This has been pretty fun to learn so far! Switching up my approach will let me learn even more about ZFS snapshots and how to manage them. It should also cut backup and restore times even further. Currently, the backup server relies on QEMU dirty bitmaps to know which blocks have changed since the last backup. If the VM has been restarted (or is currently offline), there is no bitmap, so it has to read the full zvol again! I'm excited to set up a container and start testing it soon.
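To illustrate why a restart hurts, here is a toy model of in-memory, block-level change tracking. It is a hypothetical simplification, not how QEMU or Proxmox Backup Server are actually implemented: while the tracking survives, a backup only reads the changed blocks, but once it is lost the next backup has to read every block. ZFS incremental send, by contrast, works from snapshots stored on disk, so a reboot doesn't reset it.

```python
from typing import Optional, Set


class ToyDisk:
    """Hypothetical model of a VM disk whose changes are tracked in memory."""

    def __init__(self, num_blocks: int) -> None:
        self.num_blocks = num_blocks
        # None means no valid bitmap (fresh start, restart, or VM offline).
        self.dirty: Optional[Set[int]] = None

    def write(self, block: int) -> None:
        if self.dirty is not None:
            self.dirty.add(block)  # a set, so rewriting a block counts once

    def restart(self) -> None:
        self.dirty = None  # in-memory tracking is lost with the process

    def backup(self) -> int:
        """Return how many blocks this backup must read, then reset tracking."""
        to_read = self.num_blocks if self.dirty is None else len(self.dirty)
        self.dirty = set()  # track changes from this backup onward
        return to_read


disk = ToyDisk(num_blocks=1_000_000)
print(disk.backup())  # -> 1000000 (first backup reads everything)
disk.write(42)
disk.write(42)
disk.write(7)
print(disk.backup())  # -> 2 (only the changed blocks)
disk.restart()
print(disk.backup())  # -> 1000000 (tracking lost, full read again)
```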
